Updated 4/11/2020: Everyone is interested in how we are doing in Ohio during the COVID19 pandemic. Accordingly, I look at the data from the Ohio Department of Health and assemble it into a report for you. You can read my full report below which includes multiple graphs and tables and can download the pdf. I intend to update the pdf report each day as new data becomes available. Also, you should check back often as the information displayed will change with new data. I will also offer new items as I think of them.

Full disclaimer, I am not an expert in epidemiology nor have I attempted to model the behavior and predict the future. On LinkedIn, I have written about the importance of having a qualified subject matter expert paired with each data modeler. I am nonetheless interested in any suggestions you have. I have added a footnote to each table explaining that the definition of a case changed on April 10 from “confirmed (by a test) cases” to the “confirmed cases plus probable cases” which inflates the data by 47 cases on April 10. This to match definitions by the CDC, but worries me as to the lack of consistency before and after the change date.

First up is Weekly changes in the number of cases, hospitalizations, and deaths. A look at the number of cases shows a considerable decline in the cases. Every data point is an average of the last week of cases. When changes are on way down it suggests that the curve of the total caseload is indeed being bent.

Rates of hospitalizations and deaths are shown in the next graph. This past week Amy Acton said Ohio has tested 50,000 people and our cases are just under 6000, so that means in rough measure that of everyone tested, the large majority of are showing symptoms or clearly in harm’s way, that the positive results are that about 12 percent. That suggests the actual death rate which is 3.9% or all positive cases, maybe as low as (12%) of 3.9% or about 0.4% of all those tested and much less than the death rate out of the population of 11 million. Of course, I do not have individual testing data and this is a bit of hopeful speculation.

I also did a visualization of the hospitalization and death rates by age and sex and posted that to LinkedIn. You can access that here. Similar numbers and heatmaps are in the full report below.

I used SAS® to organize and analyze the data.

Because people are interested in how we are doing in Ohio during the COVID19 pandemic I hope this is of interest to you.

OH_report_COVID19Proper citation requested. Steven C. Myers. 2020. Ohio Covid19 report. accessed at https:econdatascience.com/COVID19 on (your access date).

Request for Comments to myers@uakron.edu

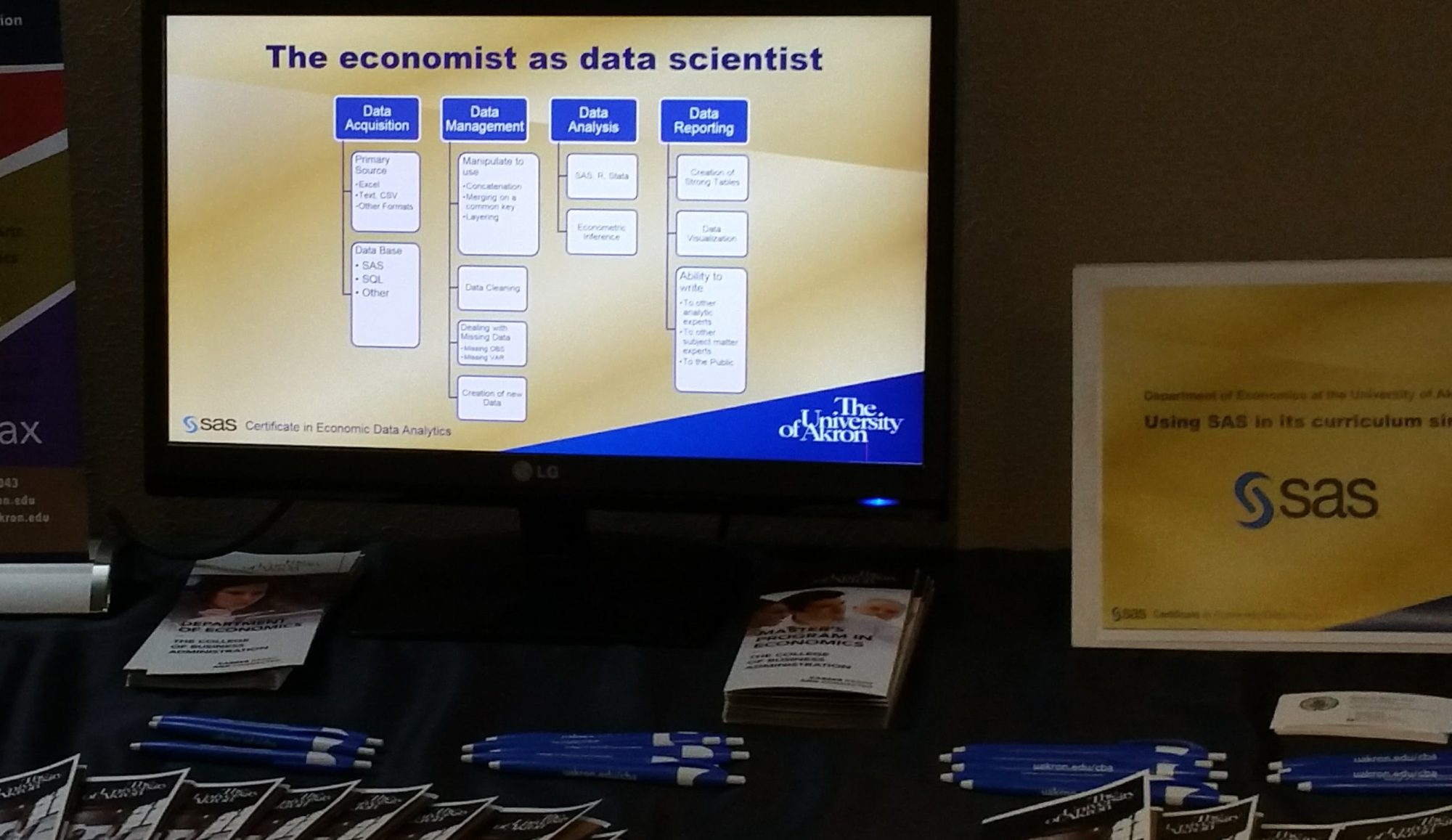

It has been 41 years since I began my academic career. I leave it at the end of this Spring semester and I will miss teaching econometrics and data science to our students. Those who know me understand my passion for SAS(r) in the econometrics curriculum and I am not dissuaded by the presence and importance of R and Python. Students who learn to program in SAS, learn far more than the analytic power of the worlds leading analytical solution. They learn in one environment how to acquire data, to manipulate and manage that data, to analyze it with powerful procedures and to visualize and report results from that data.

It has been 41 years since I began my academic career. I leave it at the end of this Spring semester and I will miss teaching econometrics and data science to our students. Those who know me understand my passion for SAS(r) in the econometrics curriculum and I am not dissuaded by the presence and importance of R and Python. Students who learn to program in SAS, learn far more than the analytic power of the worlds leading analytical solution. They learn in one environment how to acquire data, to manipulate and manage that data, to analyze it with powerful procedures and to visualize and report results from that data.

I am honored to have a version of this essay appearing in Bill Frank’s

I am honored to have a version of this essay appearing in Bill Frank’s