In my undergraduate business and economic analytics course, I have adopted Murtaza Haider‘s excellent text Getting Started with Data Science. I chose it for a lot of reasons. He is an applied econometrician so he relates to the students and me more than many authors. I truly have a very positive first impression.

Updated: November 7, 2020

On my campus you can hear economics is not part of data science, they don’t do data science, that is, data science belongs to the department of statistics (no to the engineers, to the computer science department, and on and on like that.) We have come a long way, but years ago, for example, the university launched a major STEM initiative and the organizers kept the economic department out of it even though we ask to be part of it. Of course, when they did their big role out, without our department, they brought in a famous keynote speaker who was … wait for it … an economist.

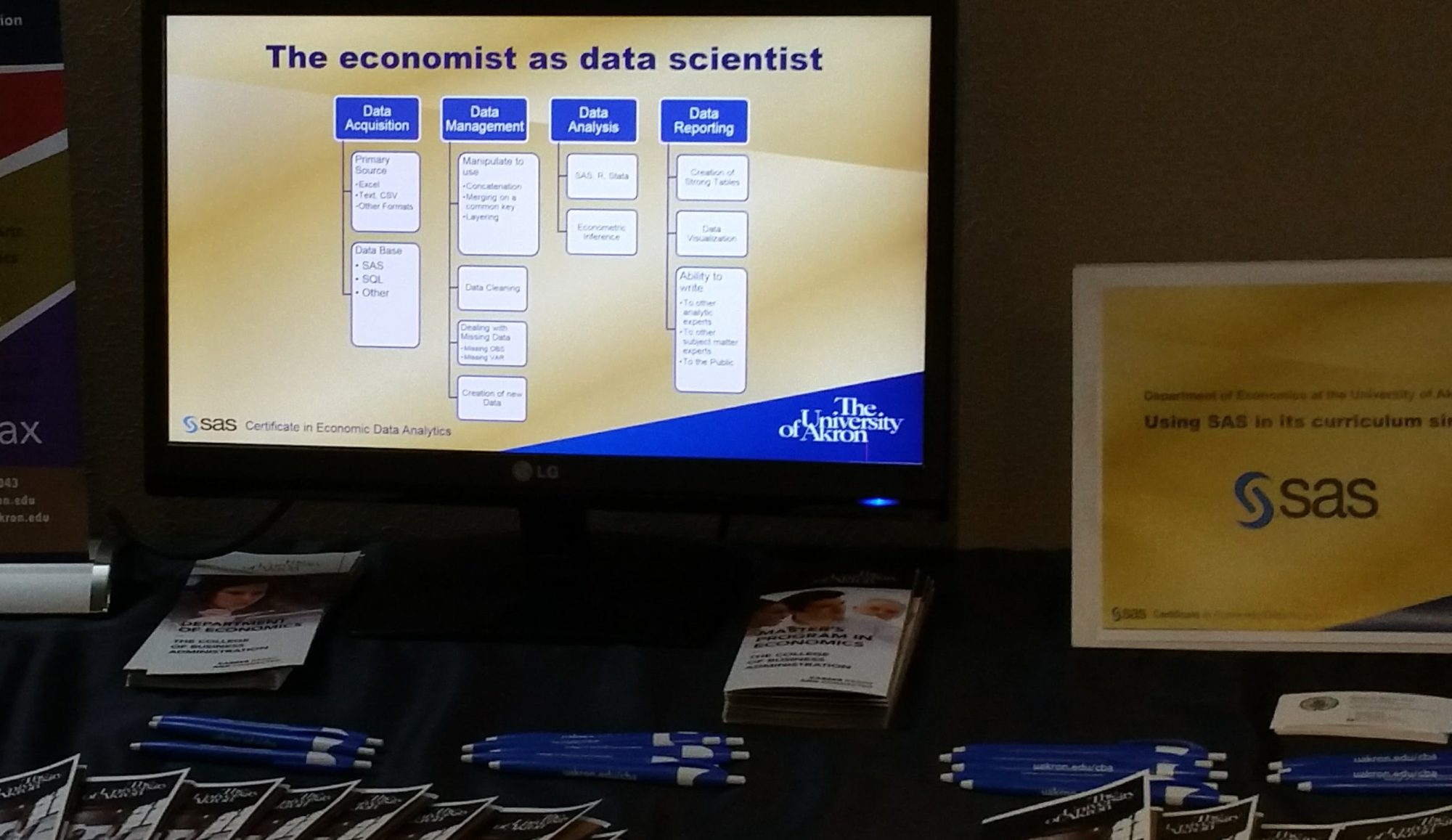

My department , just launched a Business Data Analytic economics degree in the College of Business Administration at the University of Akron. We see tech companies filling up their data science teams with economists, many with PhDs. Our department’s placements have been very robust in the analytic world of work. My concern is seeing undergraduates in economics get a start in this field. and Murtaza Haider offers a nice path.

Dr. Haider, has a Ph.D. in civil engineering, but his record is in economics, specifically in regional and urban, transportation and real-estate, and he is a columnist for the Financial Post. and I can attest to his applied econometrics knowledge based on his fine book which I explore below.

WHAT IS DATA SCIENCE

Haider has a broad idea of what is data science and follows a well-reasoned path on how to do data science. Like my approach to this class, he is heavy into visualizations through tables and graphics and while I would appreciate more design, he makes an effort to teach the communicative power of those visualizations. Also, like me, he is highly skeptical of the value of learning to appease the academic community at the expense of serving the business (non-academic) community where the jobs are. I really appreciate that part of it.

PROBLEM SOLVING AND STORYTELLING

He starts with storytelling. our department recognizes that what our economists do, what they do to bring value is they know how to solve problems and tell stories. Again this is a great first fit. He then moves to Data in a 24/7 connected world. He spends considerable time on data cleaning and data manipulation. Again I like how he wants students to use real data with all of its uncleanliness to solve problems. Chapter 3 focuses on the deliverables part of the job and again I think he is spot on.

Then through the remaining chapters he first builds up tables, then graphs, and onto advanced tools and techniques. My course will stop somewhere in the neighborhood of chapter 8.

(Update: Chapter 8 begins with the binary and limited dependent variables, and full disclosure my last course did not begin this chapter, we ended in Chapter 7 on Regression). Perhaps the professor in the next course will consider Getting Started in Data Science for Applied Econometrics II. (Update: Our breakdown in our Business Data Analytics economics degree is that Econometrics I is heavily coding and application-based, while econometrics II is a more mathematical/ theoretical based course with intensive data applications. It is a walk before you run approach, building up an understanding of analysis and data manipulation first. )

I use a lot of team-based problem-based learning in my instruction and Haider’s guidance through the text is instructing teams how to think through problems to get one of many possible solutions, not highlighting only one solution. In this way, he reinforces both creativity in problem-solving. I like what I read, I wonder what I will think after students and I go through it this term. (Update: I/we liked the text, but did not follow it page by page. The time constraint of the large data problem began to dominate and crowd out other things, hence why I did not get to Chapter 8, my proposed end. However, because in course 1 which emphasizes data results over theoretical knowledge, I was well pleased.)

PROBLEM ARTICULATION, DATA CLEANING, AND MODEL SPECIFICATION

Another reason I like the book so much is he cites Peter Kennedy, the now passed, research editor for the Journal of Economic Education. Peter was very influential on me and applied econometricians who really want to dig into the data. Most of my course is built around his work and especially around the three pillars of Applied Econometrics.: (1) the ability to articulate a problem, (2) the need to clean data, and (3) to focus deeply on model specification. He argues that most Ph.D. programs fail to teach the applied, allowing their time to focus on theoretical statistics and propertied of inferential statistics. Empirical work is often extra and conducted, even learned, outside of class. I have never taught like that (OK, maybe my first year out of my Ph.D.), but my last 40 years have been a constant striving to make sure my students are prepared for the real as opposed to the academic world. Peter made all the difference bringing my ideas into sharp focus. I like Haider’s work, Getting Started with Data Science, because it is written like someone who also holds the principles put forth by Peter Kennedy in high regard.

SOFTWARE AGNOSTIC, BUT TOO MUCH STATA AND NOT ENOUGH SAS

On page 12 he gets much credit for saying he does not choose only one software, but includes “R, SPSS, Stata and SAS.” I get the inclusion of SPSS given it is IBM Press, but there is virtually no market for Stata (or SPSS) in the state of Ohio or 100 miles around my university’s town of Akron, OH. Also, absent is python, which is in heavy use in the job market. You can see the number of job listings mentioning each program in the chart below.

I am highly impressed with Haider’s book for my course, but that does not extend to everything in the book. My biggest peeve is his heavy use of Stata. I would prefer a text that highlights the class language (SAS) more and was more sensitive to the market my students will enter.

Stata is a language adopted by nearly all professional economists in the academic space and in the journal publication space, however, I think this use is misguided when the book is to be jobs facing and not academic facing. While he shows plenty of R, there is no python and no SAS examples. All data sets are available on his useful website, but since SAS can read STATA data sets that isn’t much of a problem.

SAS Academic Specialization

Full disclosure, we are a SAS school as part of the SAS Global Academic Program and offer both a joint SAS certificate to our students as well as offering them a path to full certification.

(Update: The SAS joint certificate program has been rebranded and upgraded to the SAS Academic Specialization and is still a joint partnership between the college or university and SAS, but now in three tiers of responsibilities and benefits. We are at tier 3 and the highest level. Hit the link for more details.)

We also teach R as well in our forecasting course and students are exposed to multiple other programs over their career including SQL, Tableau, Excel (for small data handling, optimization, and charting/graphics), and more.

Buy This Book

Most typical econometric textbooks are in the multiple hundreds of dollars (not kidding) and almost none are suitable to really prepare for a job in data science. This book on Amazon is under $30 and is a great practical guide. Is it everything one needs? Of course not, but at the savings from $30 you can afford many more resources.

More SAS Examples

So it is natural given our thrust as a SAS School, that I would have preferred examples in SAS to assist the students. Nevertheless, I accepted the challenge to have students develop the SAS code to replicate examples in the book. This is a great way to avoid too much grading of assignments. Let them read Haider’s examples, say a problem that he states, and then solves with STATA. He presents both question and answer in STATA and my student’s task is to answer the problem in SAS. They can self check and rework until they come to the right numerical answer, and I am left helping only the truly lost.

Overall, I love the outline of the book. I think it fits with a student’s first exposure to data science and I will know more at the end of this term. I expect to be pleased. (Update: I was.)

If you are at all in data science and especially if you have a narrow idea that data science is only Machine Learning or big data, you need to spend time with this book, specifically read the first three chapters and I think you will have your eyes opened and a better appreciation of the field of data science.

I learned my data / statistical skepticism from a 1954 book by Darrell Huff called

I learned my data / statistical skepticism from a 1954 book by Darrell Huff called